Stop Compromising. Start Deploying.

Mission-ready, cost-effective, high-efficiency deployment platforms for AI applications moving out to the edge.

2022 Best Edge AI Processor: Blaize® Pathfinder® P1600 Embedded System on Module

The Edge AI and Vision Alliance named Blaize a winner of its 2022 Edge AI and Vision Product of the Year award. The award recognizes the innovation and excellence of the industry’s leading technology companies that are enabling visual AI and computer vision in this rapidly growing field.


Accelerate edge AI apps with up to 60X higher system-level efficiency vs. CPU/GPU

Built on the revolutionary Blaize® GSP® architecture and available in form factors for every need, Blaize products bring a new class of efficient, low-power, low-latency processing that enables real-time AI inference applications at the edge that were previously impossible.

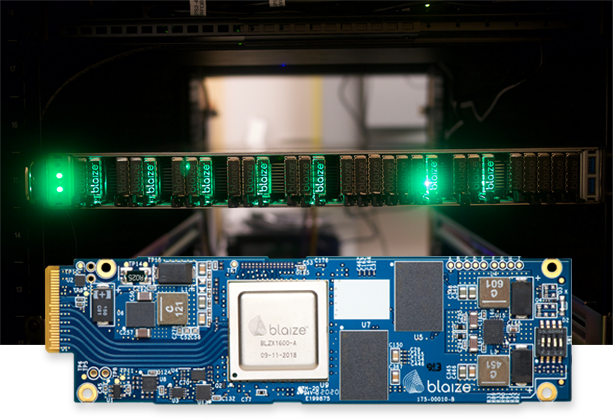

Blaize® Xplorer® X1600E EDSFF Small Form Factor Accelerator

Features

- 1 Blaize 1600 SoC with 16 GSP cores, providing 16 TOPS

- Enterprise grade

- Soft ISP available to run on Blaize 1600 SoC

- 4 GB LPDDR4

- PCIe Gen 3.0, 4 lanes

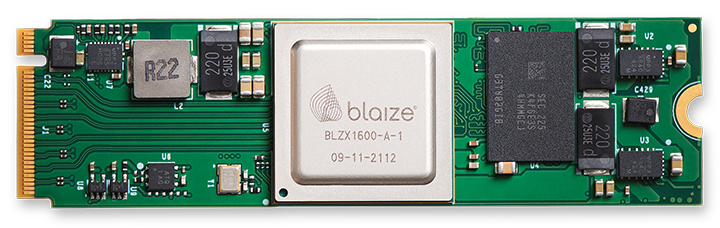

Blaize® Xplorer® X600M M.2 Small Form Factor Accelerator Platform

Features

- 1 Blaize 1600 SoC with 16 GSP cores

- H.265/H.264 Video Encode / Decode up to 4K@30FPS

- Commercial grade

- 2 GB LPDDR4

- PCIe Gen 3.0, 4 lanes

Blaize® Xplorer® X1600P PCIe Accelerator

Features

- 1 Blaize 1600 SoC with 16 GSP cores, providing 16 TOPS

- Commercial grade

- Soft ISP available to run on Blaize 1600 SoC

- 4 GB LPDDR4

- PCIe Gen 3.0, 4 lanes

Blaize® Xplorer® X1600P-Q PCIe Accelerator

Features

- 4 Blaize 1600 SoCs, each with 16 GSP cores, providing 64-80 TOPS

- Soft ISP available to run on Blaize 1600 SoC

- 16 GB LPDDR4

- PCIe Gen 3.0, 16 lanes
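
For a rough sense of what the host interfaces listed for the Xplorer cards imply, the sketch below computes the theoretical PCIe Gen 3.0 link bandwidth for a 4-lane and a 16-lane card. It assumes the standard PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding; the function name is illustrative and does not come from any Blaize software. Real-world throughput will be lower once protocol overhead is included.

```python
def pcie_gen3_bandwidth_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth (GB/s) of a PCIe Gen 3.0 link.

    Assumes 8 GT/s per lane and 128b/130b line encoding (standard
    PCIe 3.0 values); packet and protocol overhead are ignored.
    """
    transfers_per_second = 8e9          # 8 GT/s per lane
    encoding_efficiency = 128 / 130     # 128 payload bits per 130 line bits
    bits_per_second = transfers_per_second * encoding_efficiency * lanes
    return bits_per_second / 8 / 1e9    # bits -> bytes -> GB


# Lane counts taken from the feature lists: 4 lanes and 16 lanes.
for lanes in (4, 16):
    print(f"x{lanes}: {pcie_gen3_bandwidth_gb_per_s(lanes):.2f} GB/s")
```

These are upper bounds on host-to-card transfer rates, not measured figures for any Blaize product.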

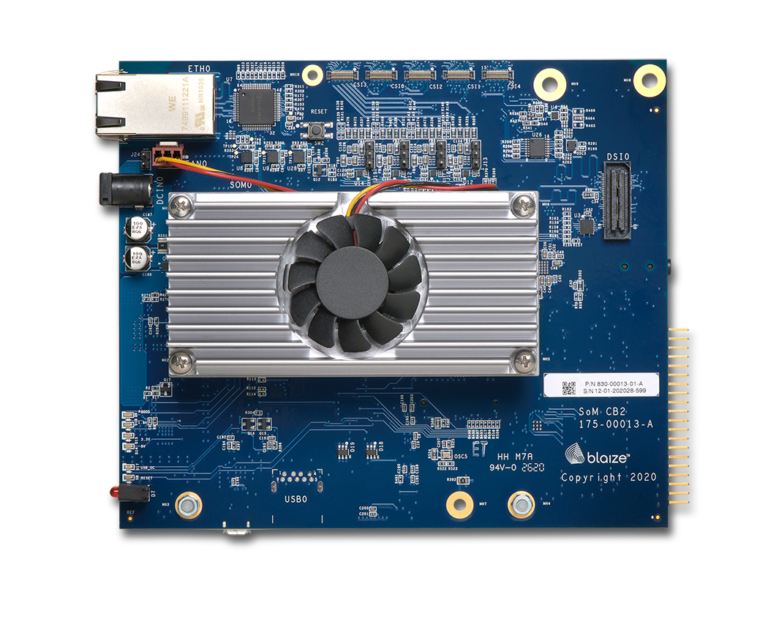

Blaize® Pathfinder® P1600 Embedded System on Module

Features

- 1 Blaize 1600 SoC with 16 GSP cores, providing 16 TOPS

- Dual ARM Cortex A53 processors

- MIPI CSI camera interfaces

- MIPI DSI display interfaces

- H.264/H.265 video encode and decode

- Soft ISP available to run on Blaize 1600 SoC

- Commercial and industrial grade

- 4 GB LPDDR4

- PCIe Gen 3.0, 4 lanes

Blaize® Pathfinder® 1600-DK Embedded Kit

Features

- Blaize Pathfinder P1600 System on Module

- Carrier Board

- Power Supply

- Micro-USB Cable

- 2 MIPI CSI-2 HD Cameras

- SD Card

- Ethernet Cable

- Carrier Board Jumpers and assembly hardware

- DSI to HDMI adapter

- HDMI cable

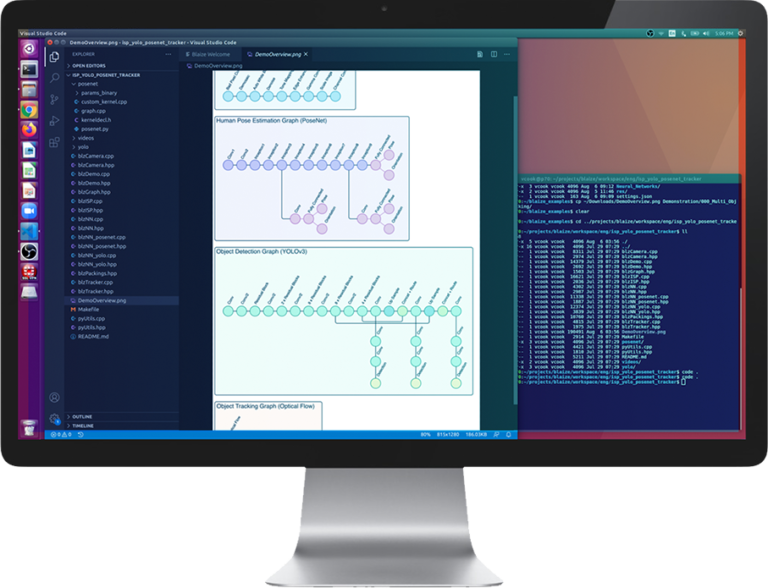

Programmability to Build Complete AI Apps and Keep Pace with the Rapid Evolution of AI Models

All Blaize GSP-based systems are fully programmable via the Blaize® Picasso® Software Development Platform. The hardware and software are purpose-built so that developers can build entire AI applications that are optimized for edge deployment constraints and run efficiently in a fully streaming fashion, and can continuously iterate to keep up with the rapid evolution of neural networks.
